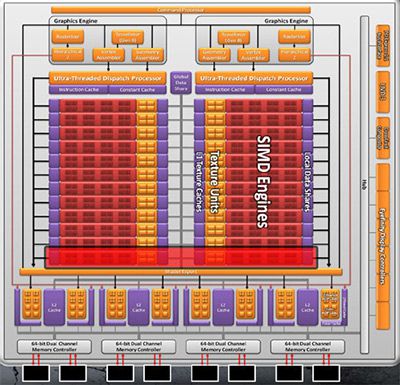

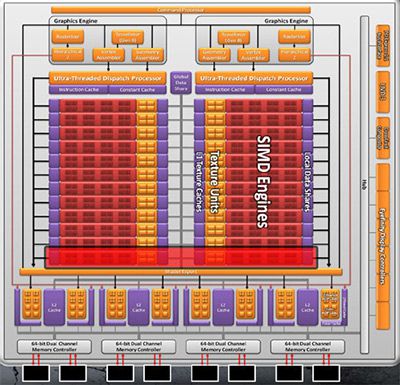

Hardware enthusiasts are always on the lookout for ways of getting something for nothing or expanding what a given piece of equipment can do. Overclocking, soft-modding: anything goes when it comes to squeezing those precious few extra flops or frames out of their hardware, or making it perform like a much more expensive model. Examples include core unlocking on AMD CPUs, converting NVIDIA GeForce cards into Quadros, and even using software to add features to sound cards. A couple of days ago, a softmod was released that converts an AMD HD 6950 into an HD 6970. This is not merely an overclock: it actually re-enables the extra shaders as well.

You can read the rest of our post and discuss here.