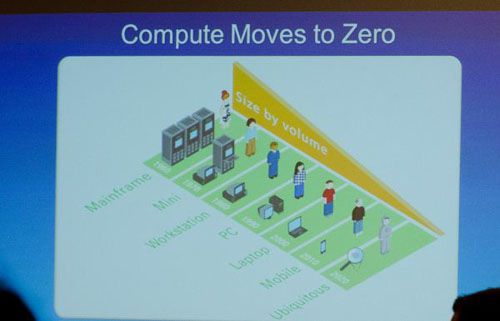

It's fun to look at a trend and predict the future. However, that's the only thing Intel is doing here: futurology.

Like any good science fiction novel, we expect the future to keep using today's technologies. I say, however, that current computer technology cannot be stretched much further. The central processing unit isn't the only player in today's computer architecture. It's useless without a bus, without storage and memory, and it's becoming increasingly useless without all sorts of communications adapters. All of these take up space, and all contribute their current inefficiency to these super-fast processors, which will rarely have a chance to show their true potential.

In fact, we already see that happening today. With multi-threaded development being a complex discipline, we even see modern software that doesn't use a processor to its fullest. Most applications that shouldn't be single-threaded still are. Our processors are currently under-utilized, mostly thanks to software development methodologies that couldn't keep up with the speed at which computer technology developed. So hardware and software alike can contribute to a situation in which a processor is much like a racing car in a traffic jam. It could go faster, but it isn't allowed to.

It's important for the whole of the computer industry to catch up with developments in processor power. This includes, btw, not having to sell your kids for an SSD, or not having to waste 600 watts on a passable system with a passable modern graphics card.

Meanwhile, smaller processors surely mean that computers will soon be everywhere. But it remains to be seen whether this is a necessity or merely a desire. Ubiquity may well be overrated. What it certainly won't mean is that the least developed and poorest countries will benefit. Their problems are structural in nature and won't be solved by a stream of cheap technology when they haven't even laid out a communications infrastructure and are having trouble training good professionals in the various fields.