From our front-page news:

When Intel first announced plans to launch its own discrete graphics card, Larrabee, it seemed like everyone had an opinion. If you talked to a company such as AMD or NVIDIA, laughter, doubt, and more laughter were sure to follow. The problem, of course, is that Intel has never been known for graphics, and its integrated solutions get more flak than praise, so picturing the world's largest CPU vendor building a quality GPU... it seemed unlikely.

The doubt surrounding Larrabee became even more pronounced this past September, during the company's annual Developer Forum, held in San Francisco. There, Intel showed off a real-time ray-tracing demo, and for the most part, no one was impressed. The demo was based on Enemy Territory, a game that itself runs on an even older engine. Needless to say, hopes were dampened even further after this lackluster "preview".

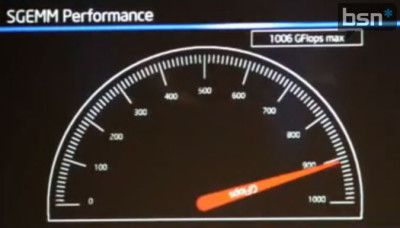

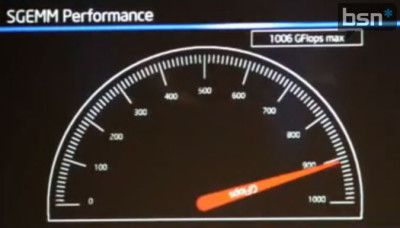

But hope is far from lost, as Intel has proven at the recent Supercomputing 09 (SC09) conference, held in Portland, Oregon. There, the company showed off Larrabee's compute performance, which hit 825 GFLOPS. During the showing, the engineers tweaked what they needed to, and the result broke the 1 TFLOPS barrier. But here's the kicker: this was achieved with SGEMM, a real benchmark that people in the HPC community rely on heavily. What did NVIDIA's GeForce GTX 285 score in the same test? 425 GFLOPS. Yes, less than half of Larrabee's tuned result.
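For readers wondering where a figure like "825 GFLOPS" comes from, here is a rough sketch of how SGEMM throughput is usually derived. It assumes the conventional operation count of 2·M·N·K for multiplying an M×K matrix by a K×N matrix (one multiply and one add per inner step). The function names are our own, and the pure-Python multiply is only a stand-in that uses double-precision floats. It is not the code Intel or NVIDIA ran, and its results are in no way comparable to theirs.

```python
import time

def sgemm_flops(m, n, k):
    """Conventional operation count for an (m x k) times (k x n) multiply:
    one multiply and one add per inner-loop step."""
    return 2 * m * n * k

def gflops(m, n, k, seconds):
    """Convert an operation count and a wall-clock time into GFLOPS."""
    return sgemm_flops(m, n, k) / seconds / 1e9

def naive_matmul(a, b):
    """Reference matrix multiply on lists of lists (hypothetical stand-in
    for a tuned SGEMM kernel; Python floats are double precision)."""
    rows, inner, cols = len(a), len(b), len(b[0])
    return [
        [sum(a[i][p] * b[p][j] for p in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]

def measure(n=64):
    """Time one n x n multiply and report the achieved GFLOPS."""
    a = [[1.0] * n for _ in range(n)]
    b = [[2.0] * n for _ in range(n)]
    start = time.perf_counter()
    c = naive_matmul(a, b)
    # Guard against a zero reading on very coarse timers.
    elapsed = max(time.perf_counter() - start, 1e-9)
    return c, gflops(n, n, n, elapsed)

if __name__ == "__main__":
    _, rate = measure(64)
    print(f"naive Python matmul: {rate:.4f} GFLOPS")
```

By this accounting, a 1000×1000×1000 multiply that finishes in two seconds works out to exactly 1 GFLOPS, which gives some sense of the scale of the numbers quoted above.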

This is impressive in every regard, because if Intel's card surpasses the competition by such a large margin where complex mathematics is concerned, then AMD and NVIDIA might actually have something to worry about. Unfortunately, such high computational performance doesn't necessarily translate into equally impressive gaming performance, so we're still going to have to wait a while before we can see how it stacks up there. But this is still a good sign. A very good sign.

As you can see for yourself, Larrabee is finally starting to produce some positive results. Even though the company had silicon for over a year and a half, the performance simply wasn't there and naturally, whenever a development hits a snag - you either give up or give it all you've got. After hearing that the "champions of Intel" moved from the CPU development into the Larrabee project, we can now say that Intel will deliver Larrabee at the price the company is ready to pay for.

Source: Bright Side of News*
