From our front-page news:

Overclocking. Hobby for some, life for others. I used to take overclocking seriously to a certain degree, but to become truly successful at it, you have no choice but to devote a lot of time and energy to studying up and sometimes even modding whatever product it is you're trying to overclock. That's been especially true with motherboards, and it's not uncommon to see extra wires soldered from one point to another in order to get the upper hand in a benchmarking run.

This isn't that extreme, but to offer even more control to their customers, EVGA has released their long-awaited "GPU Voltage Tuner", which does just what it sounds like. With CPU overclocking, the option to increase the voltage can be found right in the BIOS... it's far from being complicated. With GPU overclocking, the process can be much more complex, since the option for voltage control has never been easily available.

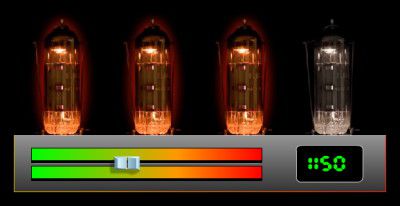

With this new tool, which exclusively supports EVGA's own GTX 200-series, the user is able to increase the voltage in what seems to be three or four steps total. As it stands right now, the red area seen in the picture is not yet enabled, and it's not hard to guess why. With this tool, heat is going to increase, so it's imperative to make sure you have superb airflow. If your card dies when using this tool, it doesn't look like it's covered under warranty, so be careful out there!

This utility allows you to set a custom voltage level for your GTX 295, 280 or 260 graphics card. (see below for full supported list) Using this utility may allow you to increase your clockspeeds beyond what was capable before, and when coupled with the EVGA Precision Overclocking Utility, it is now easier than ever to get the most from your card. With these features and more it is clear why EVGA is the performance leader!

Source: EVGA GPU Voltage Tuner

This isn't that extreme, but to offer even more control to their customers, EVGA has released their long-awaited "GPU Voltage Tuner", which does just as it sounds. With CPU overclocking, the option to increase the voltage can be found right in the BIOS... it's far from being complicated. With GPU overclocking, the process could prove to be much more complex, since the option for voltage control has never been easily available.

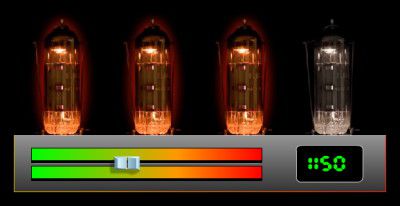

With this new tool, which exclusively supports EVGA's own GTX 200-series, the user is able to increase the voltage in what seems to be three or four steps total, and as it stands right now, the red area, as seen in the below picture, is not yet enabled, and I can jump to conclusions to understand why. With this tool, heat is going to increase, so it's imperative to make sure you have superb airflow. If your card dies when using this tool, it doesn't look like it's warrantied, so be careful out there!

This utility allows you to set a custom voltage level for your GTX 295, 280 or 260 graphics card. (see below for full supported list) Using this utility may allow you to increase your clockspeeds beyond what was capable before, and when coupled with the EVGA Precision Overclocking Utility, it is now easier than ever to get the most from your card. With these features and more it is clear why EVGA is the performance leader!

Source: EVGA GPU Voltage Tuner