From our front-page news:

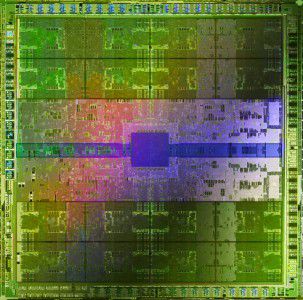

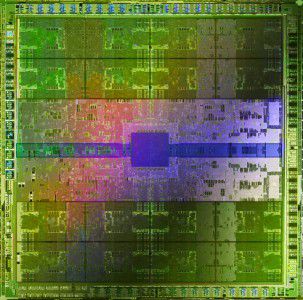

Earlier this week, NVIDIA unveiled its "Fermi" architecture, one that places a massive focus on the GPU as a computational processor. As far as GPGPU (general-purpose GPU) computing is concerned, NVIDIA has been at the forefront of pushing the technology. Others, such as ATI, haven't been too focused on it until very recently, so in many regards, NVIDIA is a trailblazer. The adoption of GPGPU as a standard has been slow, but I for one am hoping to see it grow over time.

The reason I want to see GPGPU grow is simple. We all have GPUs in our computers, and some of us run out and pick up the latest and greatest cards, ones that deliver some incredible gameplay performance. But how much of the time you spend on a computer is actually for gaming? It'd be great to tap into the power of our GPUs for other uses, and to date, there are a few good examples of what can be done, such as video conversion/enhancement and even password cracking.

No, I didn't go off-topic, per se, because it's NVIDIA that's pushing for a lot more of this in the future, as Fermi has been designed from the ground up to be a killer architecture for both gaming and GPGPU. According to the press release, the architecture has great support for C++, C, Fortran, OpenCL, DirectCompute and a few others; it adds ECC, delivers 8x the double-precision computational power of previous-generation GPUs, and much more. Think this is all marketing mumbo jumbo? Given that one laboratory has already opted to build a supercomputer with Fermi, I'd have to say that there is real potential here for a GPGPU explosion. Well, as long as Fermi does turn out to be good for all-around computing, and isn't only good for video conversion and Folding apps.

Since Fermi was announced, there have been some humorous happenings. Charlie over at Semi-Accurate couldn't help but notice just how fake NVIDIA's show-off card looked, and pointed out all the reasons why. And though NVIDIA e-mailed him to say that it was indeed a real, working sample, Fudzilla has supposedly been told by an NVIDIA VP that it was actually a mock-up. At the same time, Fudzilla was also told by Drew Henry, GM of GeForce and ION, that launch G300 cards will be the fastest GPUs on the market in terms of gaming performance, even beating out the newly launched HD 5870. Things are certainly going to get exciting if that proves true.

"It is completely clear that GPUs are now general purpose parallel computing processors with amazing graphics, and not just graphics chips anymore," said Jen-Hsun Huang, co-founder and CEO of NVIDIA. "The Fermi architecture, the integrated tools, libraries and engines are the direct results of the insights we have gained from working with thousands of CUDA developers around the world. We will look back in the coming years and see that Fermi started the new GPU industry."

Source: NVIDIA Press Release
